From Satisficing to Optimization in Reinforcement Learning

FWF project PAT6918624 (2025-2027)

Project leader: Ronald Ortner

Department für Mathematik und Informationstechnologie

Lehrstuhl für Informationstechnologie

Montanuniversität Leoben

Franz-Josef-Straße 18

A-8700 Leoben

Tel.: +43 3842 402-1503

Fax: +43 3842 402-1502

E-mail: ronald.ortner(at)unileoben.ac.at

About the project

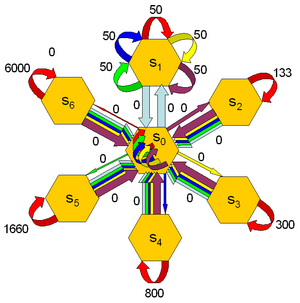

Reinforcement learning (RL) has been successful in applications, but theory has not been able to guarantee the reliability and robustness of the algorithms used. One reason is that RL theory focuses on optimization, while practical RL problems are task-oriented, so that optimality does not play any role. We aim to restart RL theory by replacing the optimality paradigm with a criterion based on satisficing, which will facilitate the development and analysis of algorithms. In the precursor project we were able to give first regret bounds, in the bandit setting as well as in the general MDP setting, that, unlike classic regret bounds, are independent of the horizon. Now we are interested in

- optimizing the parameters in these bounds,

- obtaining corresponding lower bounds,

- taking a closer look at the regime between satisficing and optimization, and

- investigating alternative performance measures that, unlike the regret, are not worst-case.
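
As an illustration of the contrast between optimization and satisficing, the two notions of regret can be sketched for the bandit setting as follows. The notation is an assumption made here and is not taken from the project description: $\mu_a$ is the mean reward of arm $a$, $a_t$ is the arm chosen at step $t$, $T$ is the horizon, and $S$ is a satisfaction level chosen by the user.

```latex
% Classic regret: loss with respect to the optimal arm
R_T = \sum_{t=1}^{T} \bigl( \mu^* - \mu_{a_t} \bigr),
\qquad \mu^* = \max_a \mu_a .

% Satisficing regret (assumed form): only steps whose arm
% falls short of the satisfaction level S contribute
R_T^{S} = \sum_{t=1}^{T} \max\bigl\{ S - \mu_{a_t},\, 0 \bigr\} .
```

Under this sketch, a horizon-independent bound means $R_T^{S} \le C$ for all $T$, with a constant $C$ that does not depend on $T$, which is plausible only when some arm satisfies $\mu_a \ge S$. Classic regret $R_T$, by contrast, cannot be bounded independently of $T$ in general and grows at least logarithmically in $T$.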